Decoding Generative AI Basics: Content

Part II: A Simple Guide to How Transformer Models Generate Content

Quick Recap of Part I: In the previous section, we explored how key concepts of the transformer model, such as attention and positional encoding, help build context. You can find Part I here: Decoding Generative AI Basics: Context. Now let’s dive into Part II.

How Do Humans Generate Content?

Let us start this discussion with a list of questions. How do you think humans generate content? How do you decide what you want to say? Or let us simplify it: How do you answer the questions you receive in an exam?

In this case, let us assume you studied the answer to the question asked. During your preparation, you build context. You read and understand the material, figuring out how one word connects to others to grasp the full meaning of a sentence. Then you read the next sentence, doing the same, and gradually connect all the sentences. This logical progression forms the explanation for a specific question.

For example, if the question is, "What is the capital of the USA?" and you’ve read that the answer is "Washington DC," you probably respond confidently with that answer. The probability of answering such definitive questions correctly is high.

Now, consider a different type of question: "How would you describe your experience as an engineer at a so-and-so company?" This is not a question with a definitive answer you memorized. You don’t immediately list bullet points. Instead, you use your cognitive ability to filter the information that is relevant to the question.

You draw from your experience, which acts as your context here. The more years you’ve spent at the company, the broader the variety of ideas you’ll have to share. You first identify a key idea. At this stage, you may not have figured out the exact words to convey your thoughts, but you know the outcome you’re aiming for. As you speak, you figure out the right choice of words, one after another, to get there.

If the task is to share a fictional story, you don’t rely on factual context. Instead, you create a vivid and imaginative story—perhaps about a land of unicorns—mixing real-world elements with creative ideas to craft a compelling narrative.

Drawing Parallels to Machine Learning Models

Why am I spending so much time explaining how humans speak? It’s because this process draws an interesting analogy to how machine learning models generate content today.

There are many technical concepts involved, but let us start with a simple example that illustrates how a machine learning model works: linear regression.

A Simple Example: Linear Regression

Imagine a scenario where a student’s study hours influence their exam scores. The relationship can be represented with the simple linear regression equation:

y = β0 + β1x + ϵ

y: Dependent variable (exam score).

x: Independent variable (study hours).

β0: Intercept (score when study hours = 0).

β1: Slope (how much the score increases per additional hour studied).

ϵ: Error term (unexplained variance).

The model is trained on historical data to learn the pattern of how study hours affect scores. During training, the model adjusts the parameters β0 and β1 until its predictions fit the data as closely as possible. It can then predict likely scores for any given number of study hours.
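To make the idea of learning β0 and β1 concrete, here is a minimal sketch in plain Python. The study hours and scores are made-up numbers, and the function name `fit_line` is only illustrative. It uses the closed-form least-squares solution, which is one common way to fit a single-variable linear regression; large models instead adjust their weights step by step.

```python
# Hypothetical data: hours studied and the exam score each student received.
hours = [1.0, 2.0, 3.0, 4.0, 5.0]
scores = [52.0, 58.0, 61.0, 67.0, 72.0]


def fit_line(x, y):
    """Return (beta0, beta1) minimising squared error for y = beta0 + beta1 * x."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    # Slope: how x and y vary together, divided by how much x varies.
    cov_xy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    var_x = sum((xi - mean_x) ** 2 for xi in x)
    beta1 = cov_xy / var_x
    # Intercept: the fitted line passes through the point of means.
    beta0 = mean_y - beta1 * mean_x
    return beta0, beta1


beta0, beta1 = fit_line(hours, scores)
predicted = beta0 + beta1 * 6.0  # predicted score for 6 hours of study
print(f"intercept={beta0:.2f}, slope={beta1:.2f}, prediction={predicted:.2f}")
```

The two numbers returned by `fit_line` play the same role that weights play in a much larger model: they are the values the training process tunes.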

Now, how does this relate to content generation? Just as the parameters β0 and β1 are tuned in linear regression, machine learning models update numerous parameters—called weights—during training.

Weights

When humans build context, we connect words and sentences to form meaning. Similarly, transformer-based models build context using weights (we didn’t cover these in Part I, but they play a significant part in the process):

Embedding Layer Weights: These associate each token in the vocabulary with a high-dimensional vector. A word’s representation may differ depending on the dataset the model was trained on.

Attention Weights: These learned weights produce a query, a key, and a value for each token, which help the model determine how different words relate to one another:

Query (Q): How relevant are other tokens to this one?

Key (K): What information does this token offer?

Value (V): What information can this token share if selected?

For example, in the sentence "I hit a six with a red bat," attention weights help the model understand how "red" and "bat" are connected, improving its contextual understanding.

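Positional Encoding Weights: These allow the model to understand word order, adding depth to the context. Depending on the model, the position information is either learned during training or computed with a fixed formula.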
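The query, key, and value idea can be sketched with scaled dot-product attention in plain Python. This is a toy illustration, not a real model: the two-dimensional vectors for "six", "red", and "bat" are hand-picked assumptions, and the same vectors are reused as queries, keys, and values, whereas a trained model would produce each of them with its own learned weights.

```python
import math


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    highest = max(scores)
    exps = [math.exp(s - highest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """Scaled dot-product attention over lists of equal-length vectors."""
    d_k = len(keys[0])
    outputs, weights_per_token = [], []
    for q in queries:
        # Compare this token's query with every token's key.
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys]
        weights = softmax(scores)
        # Blend the value vectors according to the attention weights.
        blended = [
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ]
        outputs.append(blended)
        weights_per_token.append(weights)
    return outputs, weights_per_token


# Hypothetical vectors: "red" and "bat" point in similar directions.
tokens = ["six", "red", "bat"]
vectors = [[1.0, 0.0], [0.0, 1.0], [0.2, 0.9]]

outputs, weights = attention(vectors, vectors, vectors)
red_weights = dict(zip(tokens, weights[1]))  # how much "red" attends to each token
print(red_weights)
```

With these made-up vectors, "red" places more weight on "bat" than on "six", which is the kind of connection the attention weights are meant to capture.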

Now, let us move on to the weights that help generate content.

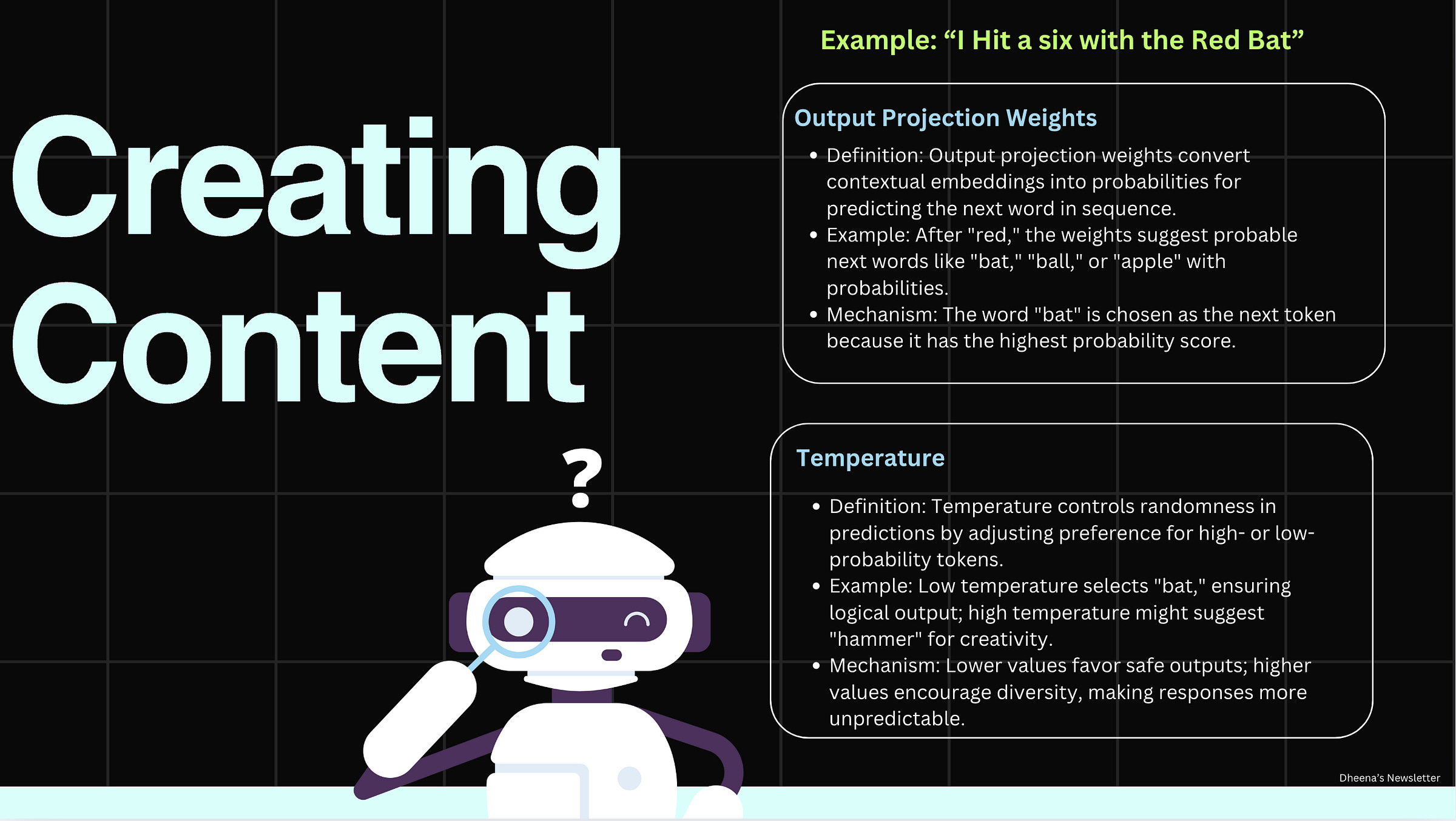

Once the context is built, output projection weights come into play. These weights convert the contextual embedding into a raw score for each word in the vocabulary, and a softmax function then turns those scores into probabilities.

For example, if the input is "I hit a six with the red...", the model evaluates possible next words like:

bat: 0.65

ball: 0.20

apple: 0.05

The word with the highest probability (bat) is chosen to complete the sentence as "I hit a six with the red bat."
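The step from a contextual embedding to next-word probabilities can be sketched as follows. Everything numeric here is an assumption made for illustration: the three-word vocabulary, the two-dimensional context vector, and the projection weights are invented, so the resulting probabilities will not match the figures above exactly.

```python
import math

# Hypothetical contextual embedding for "I hit a six with the red...".
context = [1.0, 0.5]

# Hypothetical output projection weights: one row per vocabulary word.
projection = {
    "bat": [2.0, 1.0],
    "ball": [1.0, 0.6],
    "apple": [-0.5, 0.4],
}


def next_word_probabilities(context_vector, weights):
    """Project the context onto each word, then apply softmax."""
    # Raw score (logit) per word: dot product of its weight row and the context.
    logits = {
        word: sum(w * c for w, c in zip(row, context_vector))
        for word, row in weights.items()
    }
    highest = max(logits.values())
    exps = {word: math.exp(score - highest) for word, score in logits.items()}
    total = sum(exps.values())
    return {word: value / total for word, value in exps.items()}


probs = next_word_probabilities(context, projection)
best_word = max(probs, key=probs.get)
print(probs, best_word)
```

In a real model the vocabulary has tens of thousands of entries and the embedding has hundreds or thousands of dimensions, but the shape of the computation is the same.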

If the model hasn't been trained on specific information, such as "The capital of the USA is Washington, D.C.," it may hallucinate, generating a plausible but incorrect response. This occurs because the model selects tokens with the highest probability based on its training data. However, if the training data lacks the factual answer to a particular question, the most probable response may not be accurate.

To address this limitation, organizations often employ task-specific models, fine-tune general models with domain-specific data, or ground the model's responses in relevant resources. These techniques help the model draw on relevant information and generate more accurate responses. Fine-tuning adjusts the model's weights so that it is better equipped to handle specific tasks and queries. The choice between fine-tuning and grounding depends on the situation, the use case, the time to solution, and many other factors.

Earlier, we discussed how output projection weights are used to generate a list of probabilities, each representing how likely a specific token is to be the next word. Once these probabilities are calculated, a process called "token sampling" or "decoding" is used to select the next token.

Greedy Decoding

The model selects the token with the highest probability. For example, if "bat" has the highest probability among the candidate next words (0.65 in the example above), the model picks it as the next token, completing the sentence as "I hit a six with the red bat."
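A minimal sketch of greedy decoding might look like this. The lookup table `NEXT_TOKEN_PROBS` stands in for a real model and is purely hypothetical: it only looks at the last word, and the `<end>` token and its probabilities are assumptions added so the loop knows when to stop.

```python
# Hypothetical next-token probabilities, keyed by the most recent token.
NEXT_TOKEN_PROBS = {
    "red": {"bat": 0.65, "ball": 0.20, "apple": 0.05, "<end>": 0.10},
    "bat": {"<end>": 0.90, "ball": 0.10},
    "ball": {"<end>": 0.80, "bat": 0.20},
    "apple": {"<end>": 1.00},
}


def greedy_decode(prompt_tokens, max_new_tokens=5):
    """Repeatedly append the single most probable next token."""
    tokens = list(prompt_tokens)
    for _ in range(max_new_tokens):
        probs = NEXT_TOKEN_PROBS[tokens[-1]]
        next_token = max(probs, key=probs.get)  # greedy choice
        if next_token == "<end>":
            break
        tokens.append(next_token)
    return tokens


result = greedy_decode("I hit a six with the red".split())
sentence = " ".join(result)
print(sentence)
```

Because the choice at every step is the top-scoring token, running this twice on the same prompt always gives the same sentence.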

Beam Search Decoding

Beam search considers multiple possible continuations (beams) simultaneously. It keeps track of the top-k most likely sequences at each step. The sequence with the highest cumulative probability across all tokens is ultimately selected.

Example:

Top 3 beams after the first generated token (illustrative scores):

"I hit a six with the red bat" (Score: 0.75)

"I hit a six with the red ball" (Score: 0.65)

"I hit a six with the red apple" (Score: 0.30)

Remember that there are several other decoding techniques, each with its pros and cons. Greedy decoding is often a good fit for Q&A tasks with a single definitive answer, because it always picks the highest-probability token. Beam search tends to do well in tasks such as summarization, because it keeps several candidate sequences alive and picks the one with the highest overall score rather than committing to the best token at each step.
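To make beam search concrete, here is a small sketch under the same kind of assumptions as before: the probability table is hypothetical, it depends only on the last token, and `<end>` is an invented stop token. Scores are summed log-probabilities, which is equivalent to multiplying the probabilities along each sequence.

```python
import math

# Hypothetical next-token probabilities, keyed by the most recent token.
NEXT_TOKEN_PROBS = {
    "red": {"bat": 0.65, "ball": 0.20, "apple": 0.05, "<end>": 0.10},
    "bat": {"<end>": 0.90, "ball": 0.10},
    "ball": {"<end>": 0.80, "bat": 0.20},
    "apple": {"<end>": 1.00},
}


def beam_search(prompt_tokens, beam_width=3, max_new_tokens=2):
    """Keep the beam_width best sequences at each step, ranked by log-probability."""
    beams = [(0.0, list(prompt_tokens))]  # (cumulative log-probability, tokens)
    for _ in range(max_new_tokens):
        candidates = []
        for score, tokens in beams:
            if tokens[-1] == "<end>":
                candidates.append((score, tokens))  # finished beams carry over
                continue
            for token, prob in NEXT_TOKEN_PROBS[tokens[-1]].items():
                candidates.append((score + math.log(prob), tokens + [token]))
        candidates.sort(key=lambda item: item[0], reverse=True)
        beams = candidates[:beam_width]
    return beams


prompt = "I hit a six with the red".split()
beams = beam_search(prompt)
best_score, best_tokens = beams[0]
for score, tokens in beams:
    print(f"{math.exp(score):.3f}  {' '.join(tokens)}")
```

Setting `beam_width=1` reduces this to greedy decoding, which is a handy way to see how the two techniques relate.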

You might be thinking, "Okay, so models generate content by predicting the next most likely word based on their training data, repeating this process to form a complete answer." But how do models generate creative stories? This is where a concept called "temperature" comes into play.

Adding Creativity: The Role of Temperature

Temperature controls how much randomness enters the process by adjusting how sharply the model favors high-probability tokens when it samples the next token:

High Temperature: Produces more diverse and creative outputs by flattening the probabilities. For example, "I hit a six with Thor’s hammer."

Low Temperature: Produces safer, more deterministic outputs, such as "I hit a six with the bat."

This helps the model be creative in storytelling. Imagine setting the temperature high; the model could say something whimsical, like "I hit a six with Thor’s hammer." While it isn’t logical in the real world, it’s interesting and engaging in a creative space.
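The effect of temperature can be sketched by dividing the raw scores by the temperature before applying softmax and then sampling from the result. The scores below are hypothetical, and the seeded random generator is only there to make the sketch repeatable.

```python
import math
import random


def softmax_with_temperature(logits, temperature):
    """Divide the raw scores by the temperature, then apply softmax."""
    scaled = [score / temperature for score in logits]
    highest = max(scaled)
    exps = [math.exp(s - highest) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical raw scores for three candidate continuations.
tokens = ["bat", "ball", "Thor's hammer"]
logits = [2.5, 1.3, -0.3]

low = softmax_with_temperature(logits, 0.5)      # sharper: strongly favors "bat"
neutral = softmax_with_temperature(logits, 1.0)  # unchanged softmax
high = softmax_with_temperature(logits, 2.0)     # flatter: unusual words get a chance

rng = random.Random(0)  # seeded only for repeatability
sample = rng.choices(tokens, weights=high, k=1)[0]

for name, probs in [("low", low), ("neutral", neutral), ("high", high)]:
    print(name, [round(p, 3) for p in probs])
print("sampled at high temperature:", sample)
```

At a low temperature almost all of the probability sits on "bat", while at a high temperature "Thor's hammer" is picked noticeably more often, which is where the whimsical outputs come from.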

This is analogous to humans weaving creative stories—drawing from real-world elements but mixing in unusual ones to make the narrative unique and compelling.

Final Thoughts

We began this journey by reflecting on how humans generate content: answering straightforward Q&A questions with certainty, drawing on personal experiences, and finally crafting imaginative stories. Language models follow a comparable process. They build context, draw on prior knowledge, and select the most relevant words or tokens to produce a coherent response to the specific query. Humans rely on their experiences and learned knowledge, while models depend on their trained weights and on mechanisms like embedding layers, attention, and token sampling to create meaningful outputs.

This parallel not only highlights the ingenuity of human cognition but also underscores the remarkable progress in artificial intelligence. Just as humans blend logic with creativity to tell compelling stories, AI, through mechanisms like temperature control, can emulate creativity by generating diverse and unexpected content. This shared pursuit of meaningful expression—whether by humans or machines—bridges the gap between natural and machine-generated language, opening new avenues for innovation and storytelling.

Note: This article doesn’t try to explain the architecture of the Transformer model in detail. It focuses on simplifying some core concepts of what it entails and why it has been truly transformative.
